Grasp to Act: Dexterous Grasping for Tool-Use

Abstract coming soon. Please visit the project page for the latest details and media.

2025, IEEE, Robotics and Automation Letters (RA-L) — Under Review

FruitTouch: A Perceptive Gripper for Gentle and Scalable Fruit Harvesting

The automation of fruit harvesting has gained increasing significance in response to labor shortages. We propose FruitTouch, a compact gripper that integrates high-resolution, vision-based tactile sensing through an optimized optical design. This configuration accommodates a wide range of fruit sizes while maintaining low cost and mechanical simplicity. Tactile images from an embedded camera provide rich information for real-time force estimation, slip detection, and softness prediction. We validate the gripper in real-world cherry tomato harvesting experiments, demonstrating robust grasp stability, effective damage prevention, and adaptability to challenging agricultural conditions.

2025, IEEE, Robotics and Automation Letters (RA-L) — Under Review

GelSLAM: A Real-Time, High-Fidelity, and Robust 3D Tactile SLAM System

Accurately perceiving an object's pose and shape is essential for precise grasping and manipulation. Compared to common vision-based methods, tactile sensing offers advantages in precision and immunity to occlusion when tracking and reconstructing objects in contact. This makes it particularly valuable for in-hand and other high-precision manipulation tasks. In this work, we present GelSLAM, a real-time 3D SLAM system that relies solely on tactile sensing to estimate object pose over long periods and reconstruct object shapes with high fidelity. Unlike traditional point cloud-based approaches, GelSLAM uses tactile-derived surface normals and curvatures for robust tracking and loop closure. It can track object motion in real time with low error and minimal drift, and reconstruct shapes with submillimeter accuracy, even for low-texture objects such as wooden tools. GelSLAM extends tactile sensing beyond local contact to enable global, long-horizon spatial perception, and we believe it will serve as a foundation for many precise manipulation tasks involving interaction with objects in hand.

2025, Under Review
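GelSLAM tracks objects using tactile-derived surface normals and curvatures rather than raw point clouds. To make those quantities concrete, here is a minimal sketch. It is not GelSLAM's implementation. It assumes a tactile height map sampled on a regular grid and derives per-pixel unit normals and mean curvature with central finite differences. The function name `normals_and_curvature` and the pixel pitch `pixel_mm` are hypothetical.

```python
import numpy as np


def normals_and_curvature(height: np.ndarray, pixel_mm: float = 0.1):
    """Per-pixel unit normals and mean curvature of a height map z = h(x, y).

    Hypothetical helper for illustration only: `height` is an (H, W) array of
    surface heights in mm and `pixel_mm` is an assumed pixel pitch in mm.
    Rows are treated as the y axis and columns as the x axis.
    """
    # First derivatives by central differences (one-sided at the borders).
    hy, hx = np.gradient(height, pixel_mm)
    # Second derivatives; hxy is the y-derivative of hx.
    hyy, _ = np.gradient(hy, pixel_mm)
    hxy, hxx = np.gradient(hx, pixel_mm)

    # The unit normal of z = h(x, y) is (-hx, -hy, 1) / sqrt(1 + hx^2 + hy^2).
    denom = np.sqrt(1.0 + hx**2 + hy**2)
    normals = np.stack([-hx, -hy, np.ones_like(hx)], axis=-1) / denom[..., None]

    # Mean curvature of a graph surface (Monge patch), using the same +z normal.
    mean_curv = ((1.0 + hx**2) * hyy - 2.0 * hx * hy * hxy + (1.0 + hy**2) * hxx) / (
        2.0 * denom**3
    )
    return normals, mean_curv


# Example: a paraboloid h = (x^2 + y^2) / 2 has mean curvature 1 at its apex.
x = np.arange(-10, 11) * 0.1
X, Y = np.meshgrid(x, x)
normals, curvature = normals_and_curvature(0.5 * (X**2 + Y**2), pixel_mm=0.1)
print(curvature[10, 10])  # close to 1.0
```

Normals and curvature are local, pose-invariant surface descriptors, which is why they are attractive for matching contact patches across frames when an object has little visual texture.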

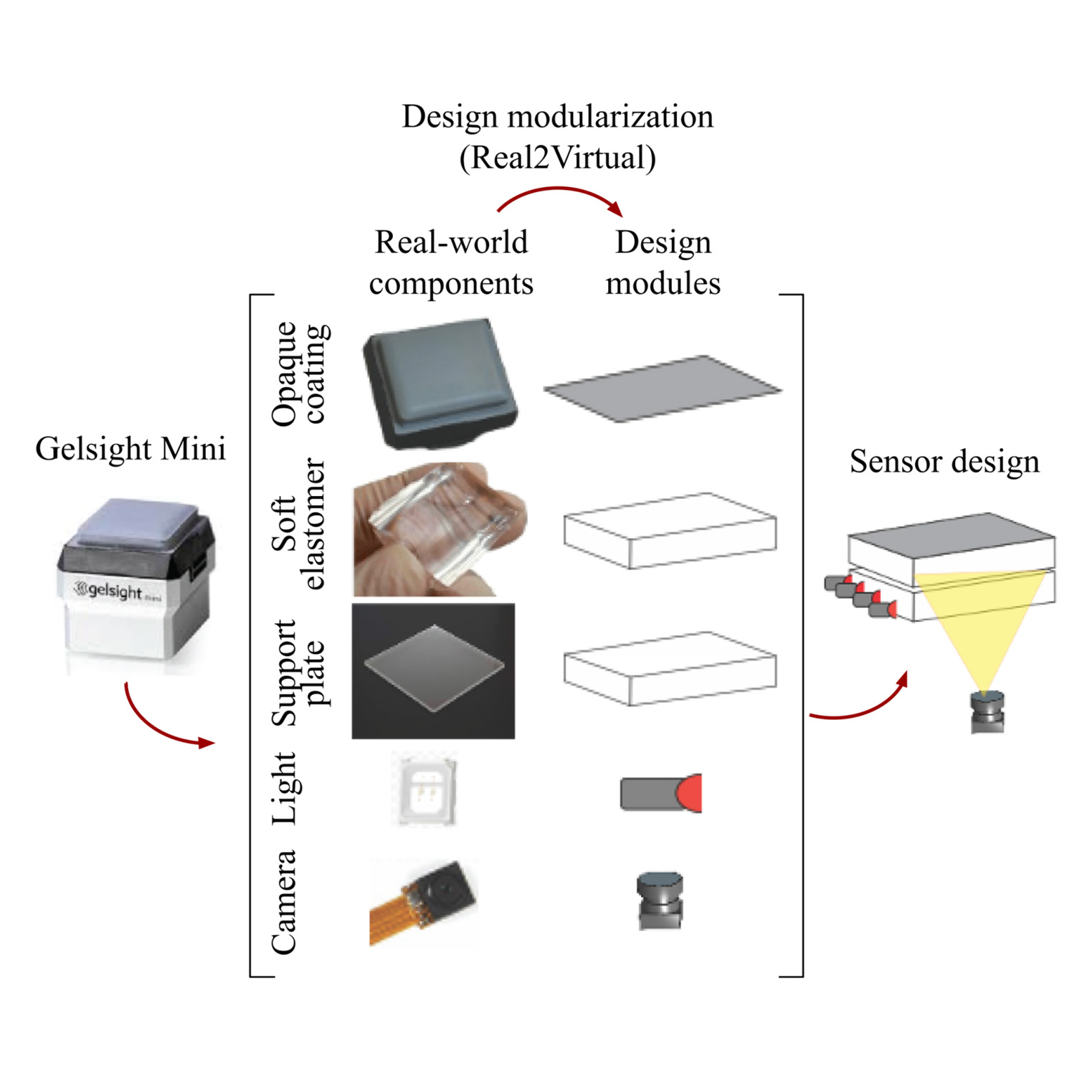

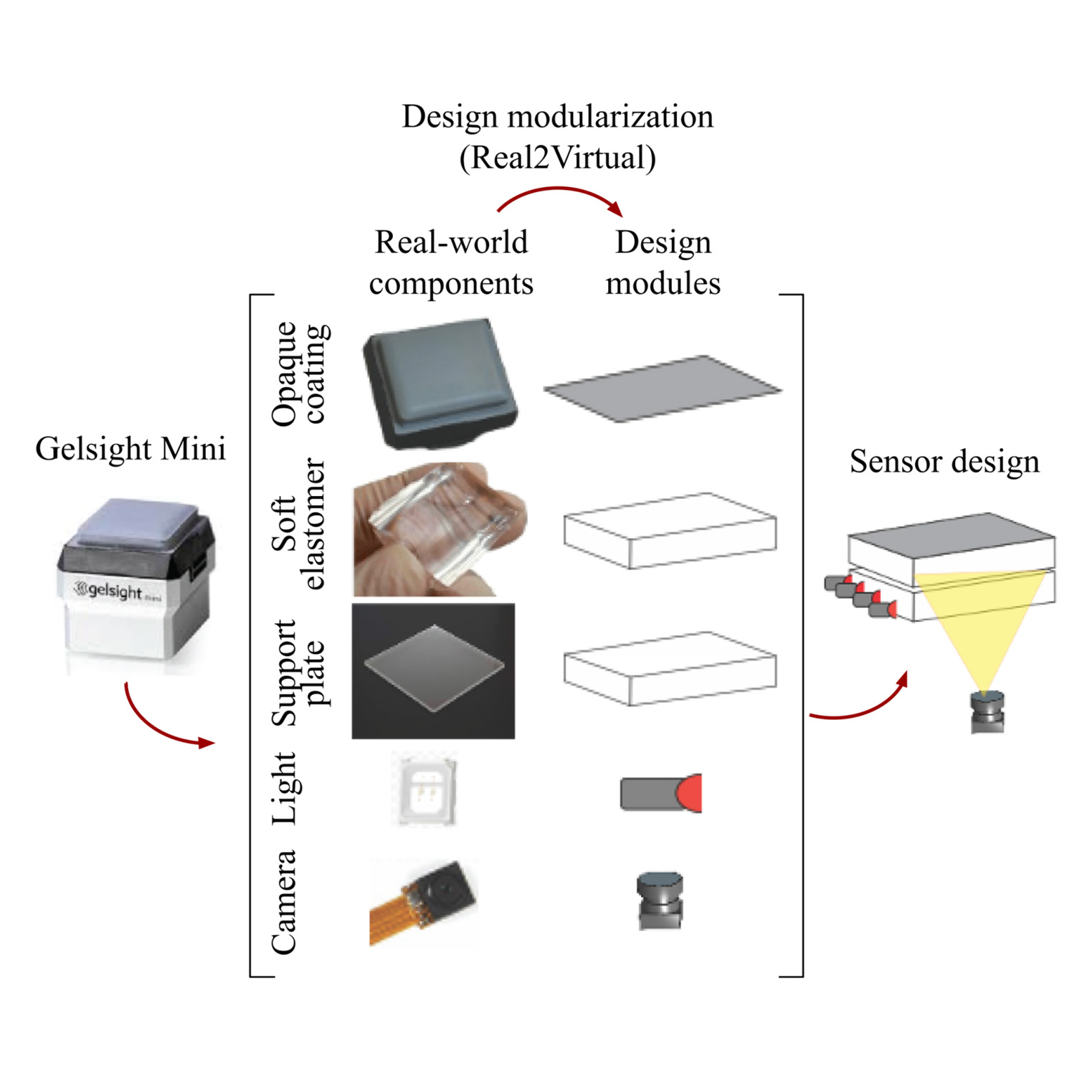

A Modularized Design Approach for Vision-based Tactile Sensors

Vision-based tactile sensors have proven important for many robot perception and manipulation tasks. These sensors use an internal optical system and an embedded camera to capture the deformation of a soft sensing surface, from which the high-resolution geometry of objects in contact is inferred. However, customizing the sensors for different robot hands requires a tedious trial-and-error process to redesign the optical system. In this paper, we formulate sensor design as a systematic, objective-driven design problem and perform design optimization with a physically accurate optical simulation. The method modularizes and parameterizes the sensor's optical components and defines four generalizable objective functions to evaluate a design. We implement the method in an interactive, easy-to-use toolbox called OptiSense Studio. With the toolbox, users without sensor expertise can quickly optimize their sensor designs through both forward and inverse design by following our predefined modules and steps. We demonstrate the system on three different GelSight sensors by quickly optimizing their initial designs in simulation and transferring the results to the real sensors. The toolbox will be made public upon publication to foster the co-design of tactile sensors and robot structures.

2025, Sage, The International Journal of Robotics Research (IJRR)

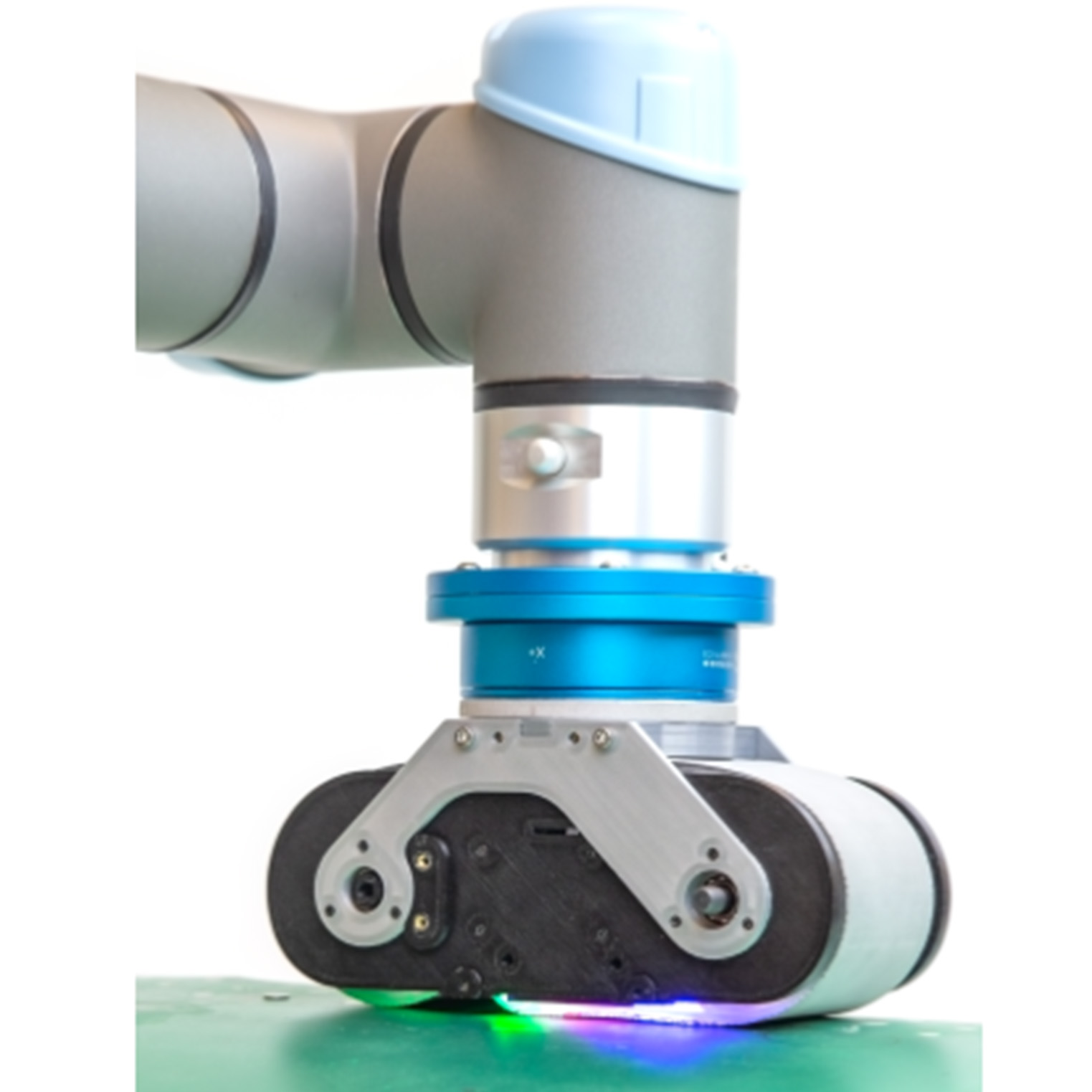

GelBelt: A Vision-based Tactile Sensor for Continuous Sensing of Large Surfaces

Scanning large-scale surfaces is in wide demand for surface reconstruction and for detecting defects during industrial quality control and maintenance. Traditional vision-based tactile sensors have shown promising performance in high-resolution shape reconstruction but suffer from limitations such as small sensing areas or susceptibility to damage when slid across surfaces, making them unsuitable for continuous sensing of large surfaces. To address these shortcomings, we introduce a novel vision-based tactile sensor designed for continuous surface sensing. Our design uses an elastomeric belt and two wheels to continuously scan the target surface. The proposed sensor showed promising results in both shape reconstruction and surface fusion, indicating its applicability. The dot product of the estimated and reference surface normal maps is reported over the sensing area and for different scanning speeds. Results indicate that the proposed sensor can rapidly scan large-scale surfaces with high accuracy at speeds up to 45 mm/s.

2025, IEEE, Robotics and Automation Letters (RA-L)
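GelBelt's accuracy is reported as the dot product between estimated and reference surface normal maps. A minimal sketch of such a metric, assuming both maps are stored as (H, W, 3) arrays and averaged over an optional boolean mask; the helper name `normal_map_agreement` is hypothetical. After normalizing to unit vectors, each dot product is the cosine of the angle between corresponding normals, so 1.0 means perfect agreement.

```python
from typing import Optional

import numpy as np


def normal_map_agreement(est: np.ndarray, ref: np.ndarray,
                         mask: Optional[np.ndarray] = None) -> float:
    """Mean per-pixel dot product of two (H, W, 3) surface normal maps.

    Both maps are normalized to unit length first, so each dot product is the
    cosine of the angle between corresponding normals (1.0 = same direction).
    `mask` is an optional (H, W) boolean array selecting the pixels to average.
    """
    est = est / np.linalg.norm(est, axis=-1, keepdims=True)
    ref = ref / np.linalg.norm(ref, axis=-1, keepdims=True)
    dots = np.sum(est * ref, axis=-1)  # (H, W) array of cosines
    if mask is not None:
        dots = dots[mask]
    return float(np.mean(dots))


# Example: normals tilted 60 degrees away from a flat reference give 0.5.
flat = np.tile([0.0, 0.0, 1.0], (4, 4, 1))
tilted = np.tile([np.sqrt(3) / 2, 0.0, 0.5], (4, 4, 1))
print(normal_map_agreement(tilted, flat))  # 0.5 up to rounding
```

Reporting the metric per scanning speed would amount to evaluating it once for each recorded scan.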

Interactive Design of GelSight-Like Sensors with Objective-Driven Parameter Selection

Camera-based tactile sensors have shown great promise in dexterous manipulation and the perception of object properties. However, the design of vision-based tactile sensors (VBTS) is largely driven by domain experts through a trial-and-error process using real-world prototypes. In this work, we formulate the design process as a systematic, objective-driven design problem using physically accurate optical simulation. We introduce OptiSense Studio, an interactive and easy-to-use design toolbox in Blender. The toolbox consists of (1) a set of five modularized widgets to express optical elements with user-definable parameters; (2) a simulation panel for the visualization of tactile images; and (3) an optimization panel for automatic selection of sensor designs. To evaluate our design framework and toolbox, we quickly prototyped and improved a novel VBTS, GelBelt. We design and optimize GelBelt entirely in simulation and show the benefits with a real-world prototype.

2024, 40th Anniversary of the IEEE Conference on Robotics and Automation (ICRA@40)

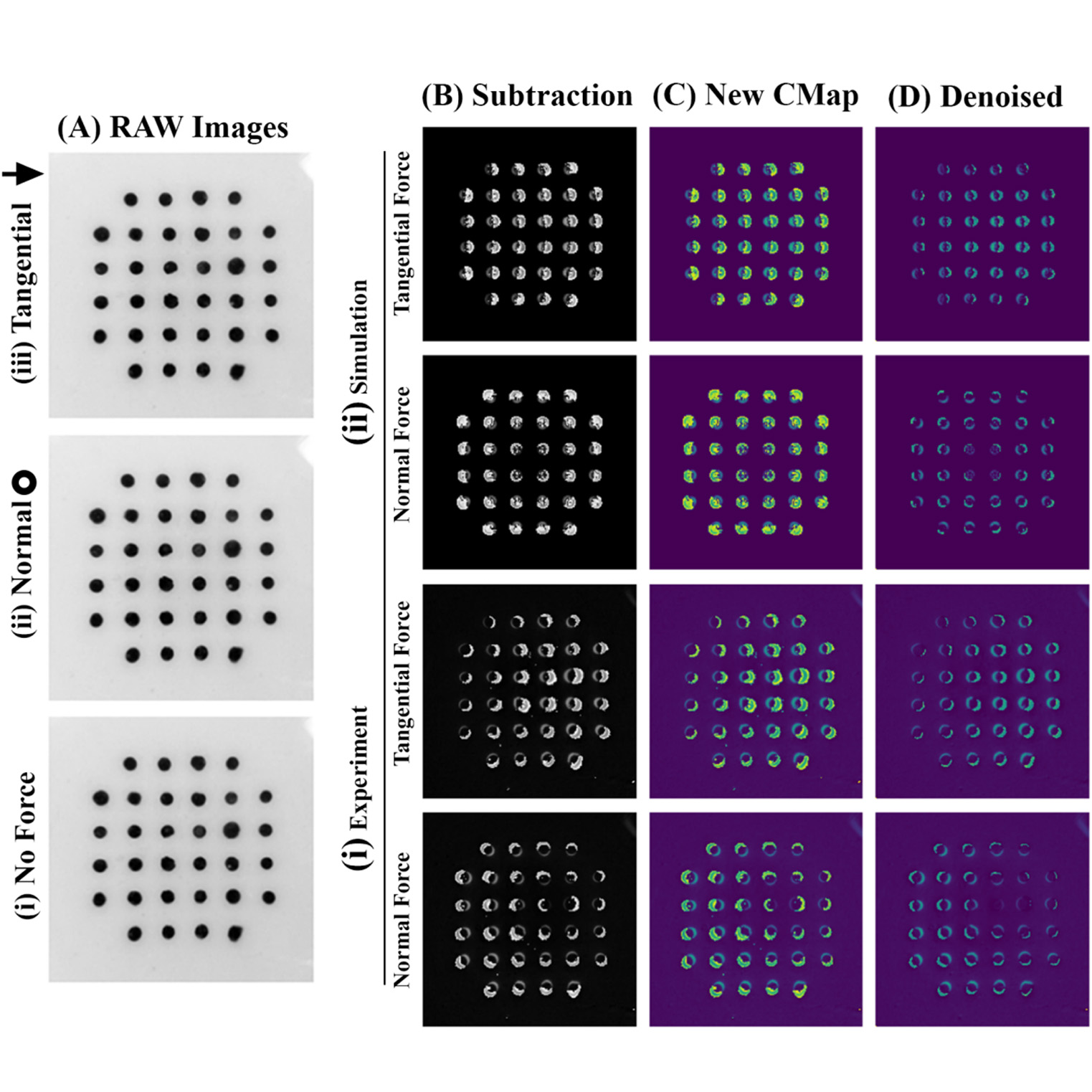

Multiphysics Simulation and Design Framework for Developing a Vision-based Tactile Sensor with Force Estimation and Slip Detection Capabilities

Recent achievements in tactile sensing have enhanced the dexterity of robots interacting with their environments. Vision-based tactile sensing outperforms other transduction mechanisms by enabling high-resolution sensing of the contact surface. Accordingly, many vision-based tactile sensors have been introduced in recent years, all of which were designed and fabricated by trial and error with limited prior system modeling and simulation. In this study, we introduce a detailed framework for developing a vision-based tactile sensor based on a marker-motion mechanism. We start with the design and coupled simulation of the mechanical and optical subsystems, followed by fabrication, testing, and validation of the proposed sensor. An uncertainty analysis is carried out during the design stage, in which various errors are propagated to determine the expected sensor accuracy. The simulated sensor showed a maximum error of 0.15 newtons, with the error in the distance from the camera to the rigid membrane contributing the most. After fabrication, the experimental results showed very good agreement with the simulation. The sensor was tested for sensing tangential forces (up to slip) along two directions and normal force (up to 5 newtons) at a 30 Hz rate, with measurement errors (95% confidence interval) of 0.39 newtons, 0.11 newtons, and 0.12 newtons, respectively. The sensor detected slip in 97% of the tests with an average latency of 10 frames.

2024, Elsevier, Sensors and Actuators A: Physical
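The abstract describes propagating design-stage errors to an expected sensor accuracy. One common way to do this is first-order (linearized) propagation of independent error sources combined as a root-sum-square; the sketch below assumes that approach, which is not necessarily the paper's procedure. The helper `propagate_uncertainty` and the toy spring model in the example are hypothetical.

```python
import math
from typing import Callable, Dict, Tuple


def propagate_uncertainty(
    model: Callable[[Dict[str, float]], float],
    nominal: Dict[str, float],
    sigma: Dict[str, float],
    rel_step: float = 1e-6,
) -> Tuple[float, Dict[str, float]]:
    """Linearized propagation of independent input errors through `model`.

    Each input's contribution is |d model / d input| * sigma, with the
    derivative estimated by a central finite difference; the contributions
    are combined as a root-sum-square, which assumes the errors are independent.
    """
    contributions = {}
    for name, s in sigma.items():
        h = rel_step * max(abs(nominal[name]), 1.0)
        up = dict(nominal, **{name: nominal[name] + h})
        down = dict(nominal, **{name: nominal[name] - h})
        sensitivity = (model(up) - model(down)) / (2.0 * h)
        contributions[name] = abs(sensitivity) * s
    total = math.sqrt(sum(c**2 for c in contributions.values()))
    return total, contributions


def force(p: Dict[str, float]) -> float:
    # Hypothetical toy model: stiffness k (N/mm) times displacement d (mm).
    return p["k"] * p["d"]


total, parts = propagate_uncertainty(force, {"k": 2.0, "d": 1.5}, {"k": 0.05, "d": 0.02})
print(round(total, 4), max(parts, key=parts.get))  # 0.085 k
```

Keeping the per-input contributions, rather than only the total, is what allows the dominant error source to be identified, in the way the abstract singles out the camera-to-membrane distance.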

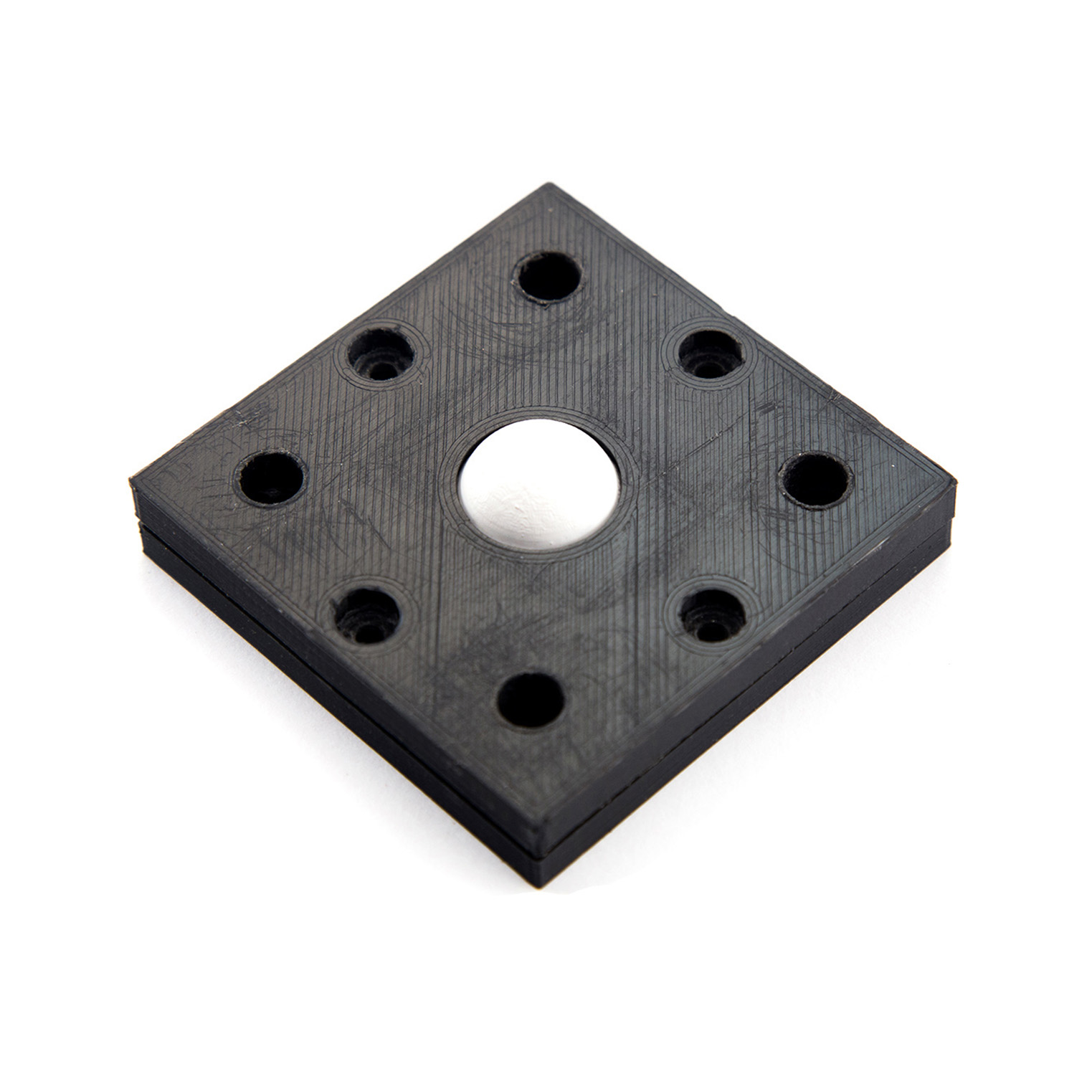

Design and Fabrication of a Vision-based Tactile Sensor for Robotic Manipulation

The sense of touch is essential for robotic arms to manipulate objects and interact optimally with their environment. Tactile sensors provide extensive quantitative and qualitative information about the contact between the sensor and the target object. With advances in machine vision and image processing, vision-based tactile sensors have been introduced as a more versatile sensing technology. This paper covers the design, fabrication, and calibration of a vision-based tactile sensor. A brief introduction to optical simulation is presented, and a convolutional neural network (CNN) model is used for force estimation on both the simulated and the experimental data. The fabricated sensor can detect normal forces up to 5 newtons and tangential forces in two directions up to slip (approximately 1.4 times the applied normal force). Over all the experimental data, the mean square error of the built sensor is 0.038 newtons along the vertical axis and 0.0033 and 0.0039 newtons along the two tangential axes.

2023, IEEE, International Conference on Robotics and Mechatronics (ICRoM)
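The slip limit reported above (a tangential force of roughly 1.4 times the normal force) corresponds to a Coulomb friction coefficient of about 1.4. A minimal sketch of the resulting slip check, assuming an isotropic friction cone that combines the two tangential axes; the function name and the default coefficient are hypothetical. At the 5 N normal-force limit, slip would be expected once the tangential load exceeds about 1.4 × 5 = 7 N.

```python
import math

# Hypothetical default: the ~1.4 tangential-to-normal ratio from the abstract,
# treated here as an isotropic Coulomb friction coefficient.
MU_APPROX = 1.4


def inside_friction_cone(fx: float, fy: float, fn: float, mu: float = MU_APPROX) -> bool:
    """Return True while the tangential load stays within the Coulomb slip limit.

    fx, fy: tangential force components (N); fn: normal force (N, > 0 when pressing).
    """
    if fn <= 0.0:
        return False  # no compressive contact, so no friction can be sustained
    return math.hypot(fx, fy) <= mu * fn


print(inside_friction_cone(6.0, 0.0, 5.0))  # True: 6 N is below the ~7 N limit
print(inside_friction_cone(5.0, 5.0, 5.0))  # False: hypot(5, 5) is about 7.07 N
```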

Design and Fabrication of a Soft Magnetic Tactile Sensor

Tactile sensing has always been a challenging area for robotic systems. Tactile sensors must provide feedback on the contact surface between the robot and the target object to ease manipulation and mimic human touch. This paper covers the design, calibration, and validation of a soft magnetic tactile sensor. Using the modeling techniques proposed in this study, one can easily adjust the mechanical characteristics of the sensor to meet the required specifications. A custom test rig is designed and built to apply known forces accurately and log the sensor's output for calibration. Moreover, the sensor's specifications and errors under high and low force slopes are examined and reported. Finally, the sensor's transfer function is estimated from its step response.

2022, IEEE, International Conference on Robotics and Mechatronics (ICRoM)
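The abstract estimates the sensor's transfer function from its step response. A minimal sketch, assuming the sensor behaves as a first-order system G(s) = K / (τs + 1) (the model order actually used is not stated here) and that the recorded response starts at rest and settles by the end of the record. The gain K is the steady-state change divided by the step size, and τ is the time to reach about 63.2% of that change. The helper name `fit_first_order` is hypothetical.

```python
import numpy as np


def fit_first_order(t: np.ndarray, y: np.ndarray, u_step: float):
    """Estimate K and tau of G(s) = K / (tau*s + 1) from a recorded step response.

    Assumes the output is at rest at t[0], the step of size `u_step` is applied
    at t[0], and the response has settled by the end of the record.
    """
    y0 = y[0]
    # Average the last 10% of samples as the steady-state value.
    y_final = float(np.mean(y[-max(1, len(y) // 10):]))
    gain = (y_final - y0) / u_step

    # For a first-order system, tau is the time to reach 1 - e^-1 (about 63.2%)
    # of the total change.
    target = y0 + (1.0 - np.exp(-1.0)) * (y_final - y0)
    crossed = (y - target) * np.sign(y_final - y0) >= 0
    idx = int(np.argmax(crossed))  # first sample at or past the target
    if idx == 0:
        return float(gain), 0.0
    # Linear interpolation between the bracketing samples.
    ta, tb, ya, yb = t[idx - 1], t[idx], y[idx - 1], y[idx]
    t_cross = ta + (target - ya) * (tb - ta) / (yb - ya)
    return float(gain), float(t_cross - t[0])


# Example on synthetic data with K = 3, tau = 0.2 s and a step of 2 units.
t = np.linspace(0.0, 2.0, 2001)
y = 3.0 * 2.0 * (1.0 - np.exp(-t / 0.2))
print(fit_first_order(t, y, u_step=2.0))  # roughly (3.0, 0.2)
```

A response with overshoot or a noticeable dead time would call for a different model, such as a second-order system or a first-order-plus-delay fit.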

* Equal Contribution